I am sure Googlers must be enjoying this: they can hardly say a word without a wealth of guesses and speculation following. This time Matt Cutts is said to have mentioned that there are 200 variables in the Google algorithm, and plenty of people have already started looking for them.

Anyway, I stumbled across this forum thread and decided to share the discussion at SEJ by providing my own list of variables and asking you to contribute. (This is the SEO perspective: as one of my best friends pointed out, this post is not intended as a list of search algorithm variables but rather as a list of SEO parameters.)

Currently there are about 130 variables in the list; try to make it 200 :)

Update: I created a Google Wave for that: please Tweet or email me to get in there and participate!

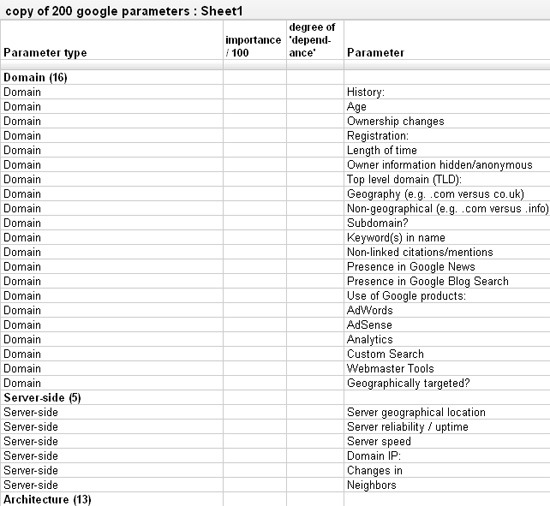

Update: Mark Nunney, a very awesome and smart SEO and a blogger at the Wordstream blog, who has joined our collaborative Wave, has put together a great table:

I added an ‘importance column’ (we could vote on that) and a ‘degree of dependence’ column. Poor phrase but important point: lists like these miss the point that many ‘big’ ‘parameters’ do nothing on their own. E.g., brand or site rep are useless on their own – CNN and the BBC do not come top for everything.

I’ve published the copy here (We think we will set up a poll to vote for the variables in empty columns):

Parameters we are almost sure (with different levels of confidence) are included in the algorithm (for your convenience I linked some of them to our previous discussions on the topic):

Domain: 13 factors

1. Domain age;

2. Length of domain registration;

3. Domain registration information hidden/anonymous;

4. Site top level domain (geographical focus, e.g. com versus co.uk);

5. Site top level domain (e.g. .com versus .info);

6. Sub domain or root domain?

7. Domain past records (how often it changed IP);

8. Domain past owners (how often the owner was changed)

9. Keywords in the domain;

10. Domain IP;

11. Domain IP neighbors;

12. Domain external mentions (non-linked)

13. Geo-targeting settings in Google Webmaster Tools

Server-side: 2 factors

1. Server geographical location;

2. Server reliability / uptime

Architecture: 8 factors

1. URL structure;

2. HTML structure;

3. Semantic structure;

4. Use of external CSS / JS files;

5. Website structure accessibility (use of inaccessible navigation, JavaScript, etc);

6. Use of canonical URLs;

7. “Correct” HTML code (?);

8. Cookies usage;

Content: 14 factors

1. Content language

2. Content uniqueness;

3. Amount of content (text versus HTML);

4. Unlinked content density (links versus text);

5. Pure text content ratio (without links, images, code, etc)

6. Content topicality / timeliness (for seasonal searches for example);

7. Semantic information (phrase-based indexing and co-occurring phrase indicators)

8. Content flag for general category (transactional, informational, navigational)

9. Content / market niche

10. Flagged keywords usage (gambling, dating vocabulary)

11. Text in images (?)

12. Malicious content (possibly added by hackers);

13. Rampant mis-spelling of words, bad grammar, and 10,000 word screeds without punctuation;

14. Use of absolutely unique /new phrases.
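Items 3 to 5 above talk about the amount of text relative to HTML. Nobody outside Google knows whether or how this is actually measured, so the following is only a minimal sketch of one way to estimate it for your own pages. The function name `text_to_html_ratio` and the decision to ignore `<script>` and `<style>` bodies are assumptions made for illustration.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping the bodies of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def text_to_html_ratio(html: str) -> float:
    """Return text characters divided by total characters of markup."""
    if not html:
        return 0.0
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    # Collapse runs of whitespace so indentation does not inflate the count.
    text = " ".join("".join(parser.chunks).split())
    return len(text) / len(html)
```

A page that is mostly navigation, scripts and markup will score close to 0, while a text-heavy article will score noticeably higher. What counts as a "good" value is a judgment call, not something this sketch can tell you.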

Internal Cross Linking: 5 factors

1. # of internal links to page;

2. # of internal links to page with identical / targeted anchor text;

3. # of internal links to page from content (instead of navigation bar, breadcrumbs, etc);

4. # of links using “nofollow” attribute; (?)

5. Internal link density,

Website factors: 7 factors

1. Website Robots.txt file content

2. Overall site update frequency;

3. Overall site size (number of pages);

4. Age of the site since it was first discovered by Google

5. XML Sitemap;

6. On-page trust flags (Contact info ( for local search even more important), Privacy policy, TOS, and similar);

7. Website type (e.g. blog instead of informational sites in top 10)

Page-specific factors: 9 factors

1. Page meta Robots tags;

2. Page age;

3. Page freshness (frequency of edits and % of page affected (changed) by page edits);

4. Content duplication with other pages of the site (internal duplicate content);

5. Page content reading level; (?)

6. Page load time (many factors in here);

7. Page type (About-us page versus main content page);

8. Page internal popularity (how many internal links it has);

9. Page external popularity (how many external links it has relative to other pages of this site);

Keywords usage and keyword prominence: 13 factors

1. Keywords in the title of a page;

2. Keywords in the beginning of page title;

3. Keywords in Alt tags;

4. Keywords in anchor text of internal links (internal anchor text);

5. Keywords in anchor text of outbound links (?);

6. Keywords in bold and italic text (?);

7. Keywords in the beginning of the body text;

8. Keywords in body text;

9. Keyword synonyms relating to theme of page/site;

10. Keywords in filenames;

11. Keywords in URL;

12. No “Randomness on purpose” (placing “keyword” in the domain, “keyword” in the filename, “keyword” starting the first word of the title, “keyword” in the first word of the first line of the description and keyword tag…)

13. The use (abuse) of keywords utilized in HTML comment tags
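The placements in this list (domain, URL, title, start of title, start of body, body) are easy to audit on your own pages. The sketch below is just one way to do that; `keyword_placement`, its parameters and the 50-word cut-off for "the beginning of the body text" are all assumptions for illustration, and the result says nothing about how much weight a search engine gives each placement.

```python
from urllib.parse import urlparse


def keyword_placement(keyword: str, url: str, title: str, body: str,
                      lead_words: int = 50) -> dict:
    """Report where a keyword phrase appears on a page (case-insensitive)."""
    kw = keyword.lower()
    parsed = urlparse(url)
    # Domains cannot contain spaces, so compare without spaces or hyphens.
    host = parsed.netloc.lower().replace("-", "")
    # URL paths usually separate words with hyphens or underscores.
    path = parsed.path.lower().replace("-", " ").replace("_", " ")
    title_l = title.lower().strip()
    body_words = body.lower().split()
    lead = " ".join(body_words[:lead_words])
    return {
        "in_domain": kw.replace(" ", "") in host,
        "in_url_path": kw in path,
        "in_title": kw in title_l,
        "starts_title": title_l.startswith(kw),
        "in_body_lead": kw in lead,
        "in_body": kw in " ".join(body_words),
    }
```

If every flag comes back `True` for the same exact phrase, that is the pattern item 12 warns about, so the output is as useful for spotting over-optimization as for spotting gaps.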

Outbound links: 8 factors

1. Number of outbound links (per domain);

2. Number of outbound links (per page);

3. Quality of pages the site links to;

4. Links to bad neighborhoods;

5. Relevancy of outbound links;

6. Links to 404 and other error pages.

7. Links to SEO agencies from clients site

8. Hot-linked images

Backlink profile: 21 factors

1. Relevancy of sites linking in;

2. Relevancy of pages linking in;

3. Quality of sites linking in;

4. Quality of web page linking in;

5. Backlinks within network of sites;

6. Co-citations (which sites have similar backlink sources);

7. Link profile diversity:

   1. Anchor text diversity;

   2. Different IP addresses of linking sites;

   3. Geographical diversity;

   4. Different TLDs;

   5. Topical diversity;

   6. Different types of linking sites (blogs, directories, etc);

   7. Diversity of link placements

8. Authority Link (CNN, BBC, etc) Per Inbound Link

9. Backlinks from bad neighborhoods (absence / presence of backlinks from flagged sites)

10. Reciprocal links ratio (relative to the overall backlink profile);

11. Social media links ratio (links from social media sites versus overall backlink profile);

12. Backlinks trends and patterns (like sudden spikes or drops of backlink number)

13. Citations in Wikipedia and Dmoz;

14. Backlink profile historical records (ever caught for link buying/selling, etc);

15. Backlinks from social bookmarking sites.
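"Anchor text diversity" in the list above is left undefined. As a rough illustration only, here is one way to put a number on it for a list of anchor texts exported from a backlink tool. The function name `anchor_text_profile` and the choice of Shannon entropy as the diversity measure are assumptions, not anything the search engines have confirmed.

```python
import math
from collections import Counter


def anchor_text_profile(anchors):
    """Summarize how varied a list of backlink anchor texts is."""
    counts = Counter(a.strip().lower() for a in anchors if a.strip())
    total = sum(counts.values())
    if total == 0:
        return {"links": 0, "unique_anchors": 0,
                "top_anchor_share": 0.0, "entropy_bits": 0.0}
    shares = [n / total for n in counts.values()]
    # Shannon entropy: 0 when every link uses the same anchor text,
    # higher when the anchors are spread over many different phrases.
    entropy = sum(p * math.log2(1 / p) for p in shares)
    return {
        "links": total,
        "unique_anchors": len(counts),
        "top_anchor_share": max(shares),
        "entropy_bits": entropy,
    }
```

A profile where one commercial phrase accounts for most of the links (a high `top_anchor_share` and low entropy) is the kind of pattern people usually mean when they call a link profile unnatural.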

Each Separate Backlink: 6 factors

1. Authority of TLD (.com versus .gov)

2. Authority of a domain linking in

3. Authority of a page linking in

4. Location of a link (footer, navigation, body text)

5. Anchor text of a link (and Alt tag of images linking)

6. Title attribute of a link (?)

Visitor Profile and Behavior: 6 factors

1. Number of visits;

2. Visitors’ demographics;

3. Bounce rate;

4. Visitors’ browsing habits (which other sites they tend to visit)

5. Visiting trends and patterns (like sudden spikes in incoming traffic)

6. How often the listing is clicked within the SERPs (relative to other listings)

Penalties, Filters and Manipulation: 12 factors

1. Keyword over usage / Keyword stuffing;

2. Link buying flag

3. Link selling flag;

4. Spamming records (comment, forums, other link spam);

5. Cloaking;

6. Hidden Text;

7. Duplicate Content (external duplication)

8. History of past penalties for this domain

9. History of past penalties for this owner

10. History of past penalties for other properties of this owner (?)

11. Past hackers’ attacks records

12. 301 flags: double re-directs/re-direct loops, or re-directs ending in 404 error
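The redirect problems in item 12 (double redirects, loops, redirects that end in a 404) can be checked mechanically. The sketch below works on a hypothetical `responses` mapping of URL to `(status, location)` that you would fill from your own crawl data; it makes no network requests, and the function and flag names are made up for illustration.

```python
REDIRECT_CODES = {301, 302, 307, 308}


def trace_redirects(start, responses):
    """Follow redirects through `responses`, a dict of url -> (status, location).

    URLs missing from the dict are treated as 404s.
    """
    chain = [start]
    flags = []
    while True:
        status, location = responses.get(chain[-1], (404, None))
        if status not in REDIRECT_CODES:
            break
        if location in chain:
            # We have been here before: the redirects never resolve.
            chain.append(location)
            flags.append("loop")
            status = None
            break
        chain.append(location)
    hops = len(chain) - 1
    if hops > 1 and "loop" not in flags:
        flags.append("multiple_redirects")
    if hops >= 1 and status == 404:
        flags.append("ends_in_404")
    return {"chain": chain, "final_status": status, "flags": flags}
```

For example, with `{"/a": (301, "/b"), "/b": (301, "/c"), "/c": (200, None)}`, tracing `"/a"` reports a two-hop chain flagged as `multiple_redirects`, which is the "double re-direct" case.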

More Factors (6):

1. Domain registration with Google Webmaster Tools;

2. Domain presence in Google News;

3. Domain presence in Google Blog Search;

4. Use of the domain in Google AdWords;

5. Use of the domain in Google Analytics;

6. Business name / brand name external mentions.

Read more: http://www.searchenginejournal.com/200-parameters-in-google-algorithm/15457/#ixzz1In5IDuTW

Wednesday, April 6, 2011

Wednesday, March 16, 2011

Global Strategies For Google's Panda Update

If all your global sites have low-quality links and duplicate content and sit in subdirectories, then Google's Panda update will likely cause you problems. But don't throw in the towel. You can still get your act together.

This article offers some insight into what you can do to mitigate any risk you might be facing, along with some ideas on how to improve your global pages now that up to 20 percent of your competitors may have disappeared.

Assessing Your Pain

Any global search engine optimization (SEO) doctor will first ask you where you feel the most pain. Identifying rank/traffic loss per country is a key strategy. Your site may have lost ranking because of something other than what you initially thought.

Panda's significance is how it views content and links, specifically similar or duplicate content and poor quality links. A thorough assessment of each one of your global sites will give you some insight into where to start and what to start with.
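Identifying the loss per country is simple arithmetic once you have visit counts from before and after the update. A minimal sketch, assuming you can export visits per country from your analytics package into two dictionaries (the function name and data layout are hypothetical):

```python
def traffic_change_by_country(before, after):
    """Percentage change in visits per country, biggest losses first."""
    changes = {}
    for country, old_visits in before.items():
        if old_visits <= 0:
            continue  # no baseline to compare against
        new_visits = after.get(country, 0)
        changes[country] = round((new_visits - old_visits) / old_visits * 100, 1)
    return sorted(changes.items(), key=lambda item: item[1])
```

Called with `{"us": 1000, "uk": 800, "de": 500}` and `{"us": 950, "uk": 400, "de": 510}`, it lists the UK first with a 50 percent drop, which tells you which site to examine before the others.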

Reviving Your Content

If you've lost positioning with pages that share the same language but didn't lose positioning from your pages that have their own languages, then chances are you got bit by the Panda due to duplicate content.

Remember, duplicate content isn't only because of your English pages. It could also be a conflict between other languages, such as Brazilian Portuguese vs. Portugal's Portuguese or even between Austrian German and German spoken in Germany.

Deduplication will be necessary. Have a content transcriber in your target country rewrite the content from your source country. It's time-consuming, but well worth it.
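To decide which pages need rewriting first, it helps to measure how similar two same-language pages really are. The sketch below compares word shingles with Jaccard similarity, a common near-duplicate technique. It is an illustration only, not a description of how Panda detects duplicates, and the function names and three-word shingle size are assumptions.

```python
import re


def shingles(text, size=3):
    """Return the set of overlapping word sequences of length `size`."""
    words = re.findall(r"\w+", text.lower())
    if len(words) < size:
        return {tuple(words)} if words else set()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def jaccard_similarity(text_a, text_b, size=3):
    """Shared shingles divided by all shingles: 1.0 identical, 0.0 disjoint."""
    a, b = shingles(text_a, size), shingles(text_b, size)
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)
```

Run it on the body text of, say, your U.S. and UK versions of the same page. Pairs that score close to 1.0 are the ones to hand to your local writer first.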

Mapping the same keyword to the content is fine. However, you might want to de-duplicate your URLs and reduce the number of AdSense ads you have placed within the content (if any). While you're at it, make sure to visit Google Webmaster Tools. Google's tools now do a pretty good job of geotargeting your URL structures, so if you have a UK subdirectory, telling Google where it is will help its spiders understand that the UK site is a local standalone site and not part of a content scheme.

Resuscitating Your Link Building Strategy

On the other hand, if you lost positioning and traffic from all of your global sites, it could be due to your link building tactics, especially if you use a subdirectory structure instead of ccTLDs.

A subdirectory structure (e.g., www.example.com/FR/) can face a number of different issues. If you had a link farm in just one country for example, and that link farm pointed to one of your pages, it's likely your whole site would be affected. If you have low quality links pointing to your duplicate UK pages, your U.S. site may suffer.

A ccTLD structure, if you can do it, would help you out a lot at this point. A URL structure like www.example.fr improves trust and engagement with your target audience, and also helps legitimate local link builders point directly to the "correct" site rather than a folder. If you have a subdirectory, make sure to tell Google about it through Webmaster Tools.
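When auditing a long list of global URLs, it can be handy to tag each one as ccTLD or subdirectory automatically. This is a rough sketch using only the two patterns mentioned above. The function name is hypothetical and the two-letter heuristic is an assumption: it will mislabel two-letter TLDs that are used generically and two-letter folders that are not country or language codes.

```python
from urllib.parse import urlparse


def locale_structure(url):
    """Guess whether a URL targets a locale via ccTLD or subdirectory."""
    # urlparse only recognizes the host when a scheme or "//" is present.
    parsed = urlparse(url if "//" in url else "//" + url)
    host = parsed.hostname or ""
    tld = host.rsplit(".", 1)[-1]
    first_segment = parsed.path.strip("/").split("/")[0].lower()
    if len(tld) == 2 and tld.isalpha():
        return ("cctld", tld)
    if len(first_segment) == 2 and first_segment.isalpha():
        return ("subdirectory", first_segment)
    return ("unknown", "")
```

Here `www.example.fr` comes back as `("cctld", "fr")` and `www.example.com/FR/` as `("subdirectory", "fr")`. The subdirectory results are the ones to double-check in the Webmaster Tools geotargeting settings.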

The key to it all is planning on making each site useful, relevant and as local as possible to your target market. The days of creating useless duplicated content and participating in link farms are now over.

Writing about SEO vs. doing SEO

After stewing on this for quite some time, I think I’m finally ready to expound my thoughts on this matter in a manner befitting both sides of the discussion. In the SEO (Search Engine Optimization) industry, there are two camps on either extreme: Those who write about SEO and those who actually do SEO. Yes, they both bleed into one another which typically breeds results-based (as opposed to theory-based) posts about SEO, but the two extremes don’t necessarily have to be at odds with one another despite some harsh opinions on the side of those who actually do SEO.

I will be the first to admit that I do more writing about SEO than doing SEO these days, but because I have done SEO before and continue to do it in some form or fashion, I’m able to reach into my experience and put the things I theorize or read about to use. As such, I always try to draw a clear distinction between when I write something based on theory and when I write something based on experience. I feel that if more people who write about SEO were clearer about the perspective from which they write, there would be less animosity between them and the doers of SEO who tend to know what really works, what doesn’t work, and when questions of ethics are actually warranted.

Since I have done SEO for a living before, I know that successful SEO typically comes down to the same rudimentary steps. Sure, there are always new theories and applications, but most all of them are simply extensions of the basics you’re already familiar with and most likely implementing with success (if you’re doing SEO for a living, that is). Not to mention, if you’re an SEO project manager and you’re juggling numerous clients, you don’t always have the mental capacity to sit down and constantly brainstorm/theorize new ideas. Heck, most of the time, you don’t even need to — especially if your toolkit is already extensive.

So, as a doer of SEO, I empathize with those who call “BS!” at the drop of a hat with some of the things they read. I mean, I certainly have had (and still have) my fair share of times where I found myself angry at someone writing about outdated SEO methods that no longer work or those who somehow manage to amass thousands of followers based on pseudo SEO claims that are complete and utter showers of garbage. But then, there are those select few terrific individuals out there who are like the philosophers of SEO; always carrying a very bright and vivid torch which helps to reveal new passages and trains of thought.

Yes, there are those who solely write about SEO and they contribute greatly to the betterment of the industry; be it helping others to be the best possible SEOs they can be or brainstorming new ideas to help usher specific facets of the SEO industry into new territory. Some of them may not have the SEO experience, but they do have some type of marketing, analytical, and/or client relation/project management experience that really allows them to bring some fresh perspectives to the table. The great thing is that we all find our way to our favorites of these types of SEO writers and they end up becoming avenues for us to consistently rely upon for new ideas to try without having to invest the mental real estate to theorize on our own.

Now, that’s not to make doers of SEO appear as though they can’t theorize on their own, because nothing could be further from the truth. It’s just easier sometimes to spend an hour or two a day keeping up with industry news and reading your favorite bloggers instead of mentally hashing it out with yourself. SEO is an ever-evolving industry and tactics often shift despite the continuity of core best practices. It is in this where my next point lies.

Discussion is an absolute necessity in SEO. You can take 10 SEOs, give them the same exact list of 10 core principles, stick them on a computer and have them fix up a Web site and they’ll all have something unique to contribute to the conversation by the end of it. Maybe one person did something that worked better than another, or perhaps one person tried something that another tried, but with no success because they left a step out that the other didn’t. I think you can glean where I’m going with this point.

There’s the agency SEO, the freelance SEO, and the in-house SEO — all with their pros and cons which we would know nothing about without either working in all three positions or reading the words of those who have worked in any of them. Discussion gets people involved and creates dialogue, and when people start talking, others become interested. From there, conversation can either wither into incessant babbling or blossom into something much more productive and educational.

Unfortunately, such public discussion can sometimes cause a certain amount of confusion amongst newcomers to the industry. I recall first making my way into the realm of SEO and all I could see was a wide array of differing opinions on everything from core principles to theories I didn’t understand back then. Yes, only in the past year-and-a-half have I attained a very clear understanding of SEO, what works, what doesn’t, who has everyone’s best interests in mind, how to identify the BS artists, etc. I am continuously learning, and because of that, I feel like the confusion caused by differing opinions actually helps in one of two ways:

Either someone cares to delve into the issues further and maybe even get into testing things out for themselves, or someone gets turned off to the idea of SEO because of the foreboding look at how obfuscated the “correct opinion” appears to be.

Sometimes, though, you get these rogue, pseudo SEO marketers who learn just enough to be dangerous to an unsuspecting victim’s wallet. Those people are a disgrace to the industry and to all that is right in the world. These people do nothing but read what others have to say, then they pick and choose from the results of other people’s tests and they use them to appear authoritative and knowledgeable. While it’s easy for an SEO to spot these people, it’s not so easy for people who are new to the industry.

With that said, my ultimate opinion here is that the SEO industry operates better because of the variance between those who simply write about SEO and those who do SEO. I used to think there were too many people who rehashed the same content over and over again without actually contributing anything, but people will always need to read the basics and find their way into the industry somehow. As many of you know, there is certainly no shortage of entry points into the SEO industry — be it for better or for worse, depending on the context with which one enters the industry.

So, to wrap things up here, it’s true that SEO is perhaps one of the most convoluted industries around. It has people who know about SEO, people who know and write about SEO, people who know about and do SEO, and people who know, write about, and do SEO. When you take that full spectrum into consideration, there are people all along it who represent varying degrees of ethics, experience, agendas, etc. The end result is a melting pot of opinions in a thriving industry that stands to benefit from the collective intelligentsia despite the bad seeds encountered along the way.

Top 13 Social Media Ranking Factors for SEO

Depending on who you speak to, search engine optimization (SEO) is either largely influenced or not at all influenced by social media. I'm sure everyone has their own opinions, case studies, and sites that show greater or lesser correlations between their social media engagement levels and their natural search results.

If you were to carry out an investigation into whether social media was a big influencing factor, which metrics would you want to monitor in order to base your insights on more empirical data?

I've put together a list of 13 ranking factors below. Feel free to use these and any others you can get your grubby SEO mitts on!

1. Number of Followers (Twitter)

You'll need your own corporate Twitter feed, which brings its own problems around brand protection and also the potential for dealing with customer service enquiries, but the more followers you have, the more authoritative your Twitter persona and the more value will be associated with your URL (assuming you have remembered to link to it).

2. Quality of Followers (Twitter)

The best followers are the ones with their own communities of followers. The more high value people who follow you, and retweet your stuff, the better.

3. Relevance of Followers (Twitter)

It's one thing getting followed and retweeted by Stephen Fry with over a million followers, but it's also important to get the same response from accounts that are more specific to your industry. Someone with "fashion" in their description who retweets your "20 percent off the new spring collection" offer is equally valuable.

4. Number of Retweets (Twitter)

Most likely as a ratio of tweets to retweets -- the more your content is reproduced by others the more authoritative it is. Obviously the more followers you have, the more likely you are to be retweeted. However, it isn't just about retweeting other people's content or dishing out promotions. It's about engaging in conversation with people in the industry.

5. Number of Fans (Facebook)

You'll need to create your own corporate profile on Facebook, which brings the same potential banana skins as a corporate Twitter feed, only multiplied numerous times due to the sheer level of engagement of people on Facebook. However, if you decide to engage with customers and potential customers on Facebook, the total number of likes your page receives will add value to your URL.

6. Number of Comments (Facebook)

A large number of likes, but little engagement, is a sure sign of someone gaming the system. People will tend to like you if you talk to them. Successful Facebook pages include a lot of content written by other people.

7. Number of Views (YouTube)

An obvious one, but any content you upload to YouTube should link to your site in the description, and the more times it is viewed, the more value will be attributed to your video.

8. User Comments (YouTube)

YouTube is also about engaging with other YouTubers and commenting on popular videos. The more you comment, the more link juice is passed back to your profile.

9. References From Independent Profiles (YouTube)

Using YouTube can bring in some really good authority if done brilliantly -- if your link from your video passes some value, imagine how much more value would be passed if you could get other people to parody your work and include links to you from their profiles. The prime example remains the Cadbury's Gorilla, but there are lots of interesting mini-campaigns trying to leverage the above.

10. Title of Video (YouTube)

Any references to your target keywords in the title of the video will help ensure that any authority passed will be relevant to a specific theme. Keywords should also be in the tags and/or transcript where possible.

11. Percent of Likes vs. Dislikes (YouTube)

Easy one. The more liked your content is, the more authoritative it is.

12. Positive vs. Negative Brand Mentions (All Social Media)

Use a tool like Radian6, or a free tool, and ensure that you have significantly more positive brand mentions than negative. It won't be 100 percent accurate, as these tools don't pick up on sarcasm. But Google already invested in this area in 2011, so it's well worth monitoring.

13. Number of Social Mentions (All Potential Media)

Total visibility across all social media shows that your content is important to all people and not just a result of a large special offer for Facebook/Twitter users. HowSociable is a simple way of giving yourself a rating here.
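Several of the factors above (4, 11 and 12) boil down to simple ratios you can track month by month. Here is a minimal sketch of how you might compute them. The function names and the counts are made up for illustration, and nothing here reflects how any search engine actually weighs these numbers.

```python
def retweet_ratio(tweets: int, retweets: int) -> float:
    """Retweets received per tweet sent; 0.0 when nothing has been tweeted."""
    return retweets / tweets if tweets else 0.0


def positive_share(positive: int, negative: int) -> float:
    """Fraction of positive items out of all positive and negative items."""
    total = positive + negative
    return positive / total if total else 0.0


# Hypothetical monthly counts, made up for illustration only.
snapshot = {
    "retweets_per_tweet": retweet_ratio(tweets=120, retweets=45),
    "youtube_like_share": positive_share(positive=180, negative=20),
    "brand_mention_share": positive_share(positive=64, negative=16),
}

for name, value in snapshot.items():
    print(f"{name}: {value:.2f}")
```

Recording the same snapshot at regular intervals gives you a trend line rather than a single reading, which is more useful when you later compare it against ranking changes.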

Types of Website Changes That Affect Search Engine Optimization

Your log should track all the changes to the website, not just those that were made with web marketing in mind. Organizations make many changes that they do not think will affect search engine optimization, but that have a big impact on it. Here are some examples.

1) Content areas, features, or options added to the site. This could be anything from a new blog to a new categorization system.

2) Changing the domain of your website. This can have a significant impact, and you should document when the switchover was made.

3) Modifications to the website's URLs and structure. Changes to URLs on your site will likely affect your rankings, so record any and all changes.

4) Implementing a new dynamic website or content management system (CMS). This is a big one, with a very big impact. If you must change your CMS, make sure you do a thorough analysis of the online marketing shortcomings of the new CMS versus the old one. And make sure you track the timing and the impact.

5) New partnerships that either send links or require them (meaning your site is earning new links or linking out to new places).

6) Changes to the navigation or menu system (moving links around on pages, creating a new link system, etc.).

7) Any redirects, either to or from the site.

8) Upticks in usage or traffic and their source (e.g., if you get mentioned in the press and receive an influx of traffic from it).

When you track these items, you can create an accurate timeline to help correlate causes with effects. If, for example, you observed a spike in traffic from Yahoo that started four to five days after you switched from menu links in the footer to the header, it is a likely indicator of a causal relationship.

Without such documentation it could be months before you notice the surge, and there would be no way to trace it back to the responsible modification. Your web design team might later choose to switch back to footer links, your traffic may fall, and no record would exist to help you understand why. Without the lessons of history, you are doomed to repeat the same mistakes.
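To make the idea of a change log concrete, here is a minimal sketch of one, along with a lookup that lists the changes made shortly before a traffic event. The `SiteChange` fields, the 14-day window, and the sample entries are all assumptions made up for illustration. A match only points you to a candidate cause worth investigating; it does not prove one.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class SiteChange:
    """One entry in the change log."""
    when: date
    category: str      # e.g. "navigation", "redirect", "CMS", "domain"
    description: str


def changes_before(log, event_day, window_days=14):
    """Return logged changes made in the window_days before event_day, newest first."""
    earliest = event_day - timedelta(days=window_days)
    recent = [c for c in log if earliest <= c.when <= event_day]
    return sorted(recent, key=lambda c: c.when, reverse=True)


# Hypothetical log entries and spike date, for illustration only.
log = [
    SiteChange(date(2011, 3, 1), "redirect", "Added 301 from /old-shop to /shop"),
    SiteChange(date(2011, 5, 10), "navigation", "Moved menu links from footer to header"),
    SiteChange(date(2011, 1, 15), "CMS", "Migrated to new content management system"),
]
spike_day = date(2011, 5, 15)

for change in changes_before(log, spike_day):
    gap = (spike_day - change.when).days
    print(f"{change.when} ({gap} days before): [{change.category}] {change.description}")
```

A spreadsheet with the same three columns works just as well; what matters is that every change gets a date.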

SEO Audits – Strategic vs. Tactical

For many years I operated in a bubble, performing audits on client sites and coordinating and consulting on the implementation of my findings, yet never having an open dialogue with other professionals in the industry about what commonalities or differences we had in our approach.

As I began writing blog articles on my approach, and fielding questions from others regarding how I went about the process, I began to learn of two typical approaches.

One involves a quick audit, an hour or two, where the most common mistakes or issues are found, followed by broad recommendations. At the other extreme, someone will spend countless hours auditing every detail, examining every page and inbound link, leading to a 50-page report, replete with complex Excel spreadsheets and analytics reports.

Personally, I take a different approach, one that works well for my needs, though it may not work for yours.

Strategic SEO Audits

The vast majority of my work these days involves strategic audits. Depending on the size and complexity of a site, I’ll spend anywhere from a few to several hours examining various aspects of a site revolving around how those aspects affect information architecture, content organization, and topical focus. While I’m doing this review, I consider indexation barriers, usability, and accessibility.

I then spend anywhere from a few minutes to upwards of a couple of hours examining the site’s inbound link profile, considering total links, total root domains, link to root ratio, and scanning the source domains for patterns regarding domain families, domain types, keyword vs. brand anchor patterns, and overall inbound link health.
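For readers who want to see what those first three numbers look like in practice, here is a minimal sketch that derives total links, total root domains, and the link to root ratio from a list of linking URLs. This is not the tooling I use, just an illustration; the `root_domain` helper is a deliberate simplification and the sample URLs are made up.

```python
from collections import Counter
from urllib.parse import urlparse


def root_domain(url: str) -> str:
    """Naive root domain: the last two labels of the hostname.

    Simplification: this mishandles suffixes such as co.uk; a real audit
    would consult the Public Suffix List instead.
    """
    host = (urlparse(url).hostname or "").lower()
    return ".".join(host.split(".")[-2:])


def link_profile(inbound_urls):
    """Summarize total links, distinct root domains, and links per root domain."""
    roots = Counter(root_domain(u) for u in inbound_urls)
    total = sum(roots.values())
    return {
        "total_links": total,
        "total_root_domains": len(roots),
        "link_to_root_ratio": total / len(roots) if roots else 0.0,
        "top_sources": roots.most_common(3),
    }


# Hypothetical linking pages, for illustration only.
inbound = [
    "http://blog.example.com/post-1",
    "http://www.example.com/resources",
    "http://news.example.org/story",
    "http://example.net/links",
    "http://forum.example.com/thread/42",
]
print(link_profile(inbound))
```

A high ratio with few root domains often means many links come from the same handful of sites, which is exactly the kind of pattern worth a closer look.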

I also spend a few minutes up to an hour or so reviewing the competitive landscape and set up one or more sweet spot charts, looking for areas of weakness in the landscape. This is critical to my process because it shows me where opportunities exist to overcome competitive difficulty with the least amount of effort for the most value.

When appropriate, I’ll also review social media factors. Here, I’ll spend anywhere from a few minutes to upwards of an hour at most.

Patterns Reveal Bigger Problems

As I’ve communicated in several articles, I look for patterns in my audit reviews. If I find three or five pages on a site that have problems with any area, my experience tells me that this is something that needs addressing. Yet once I discover an area of concern, I don’t dig too much deeper at this point in my strategic audits. Instead, I record the information, describe the problem, describe why it’s a problem, and offer a few examples of it along with examples of how it can be resolved.

By the time I’m done with this process, I typically end up with a 10, 15, or 20 page document: a road map to resolving issues that shows where energy needs to be applied. I don’t, however, go beyond this much effort during a strategic audit.

Time is Valuable

I don’t go beyond the above described effort in a strategic audit for several reasons. First and foremost, I’ve contracted for a fixed bid audit. And with so many issues to consider, it’s too easy to get bogged down in any single factor and quickly use up all the time I’ve allocated. As much as I want to go the extra mile for my clients, I’ve come to learn that I’ve got a business to run, and my time is very precious in that regard.

Another reality I’ve found is if I present too much information to site owners and managers in my first audit, they rapidly become overwhelmed, discouraged and otherwise disheartened. By keeping my audits concise from this perspective, it’s enough to wake them up to real problems. It provides them enough education to help them accept the seriousness of issues revealed, and builds a level of trust and respect for the next step, implementing tactical SEO.

Tactical Audits

When I’ve presented a strategic audit and we’ve had a follow-up discussion regarding my findings, the next question that comes naturally from clients is “Where do we begin?” If the client has an in-house person or team, or an existing vendor, they often have the ability to determine where to begin and how to go about the work. Or I might provide them a copy of my Guide to SEO for Content Writing.

If they need guidance or call upon my team and me to collaborate in the implementation, which is usually the case with clients facing extreme competition, here’s where it’s time to roll up my sleeves and get tactical.

Prioritization

Every site audit reveals unique concerns and needs. Some sites might need to focus mostly on inbound link factors, others on on-site factors, and others still on social media signals. Most of the time it’s a hybrid combination. Since I’m not an industry-leading link building authority or social media thought leader, I leave the tactical audit work in those areas to others I recommend – people and companies who are as passionate as I am but in their own area of expertise.

Defining What’s Important

When it comes to on-site factors, a typical tactical audit process happens in stages, and can most often be broken out into phases over time. There may be issues a site’s developer or development team can resolve – these can include on-site 301 redirect issues, duplicate content caused by other sites they own that should be eliminated, or architectural speed issues, for example.

What I encounter most often involves keyword assignment and topical dilution issues related to those assignments.

Audits Addressing Topical Dilution

Quite often the first tactical audit requires one or more discussions with the client to help more clearly define the highest value and most important areas, sections and pages on their site.

What comes from that dialogue is my need to validate or invalidate the client’s beliefs. Is this topic really the most valuable? Does this section of the site really have the most potential for long-term goal achievement? Could there be content stuck down one level that is more important than it’s given recognition for?

Start at the Top

Once these considerations are made, reviewed and confirmed, we can begin the work of final keyword assignment. Depending on the size of the site, this usually involves only a tiny fraction of all the site’s pages. (Remember – I work on sites with tens or hundreds of thousands or even millions of pages.)

Perhaps it’s all of the main navigation linked pages, or those, along with all or a portion of the product or service categories. Rarely does it involve sub-category level or lower pages at this point.

My best weapon in this process is an Excel spreadsheet. In this spreadsheet I’ll have two, three or more tabs depending on how far this first tactical audit goes.

Required Tabs

In a tactical audit, the following tabs are required:

* Pages Evaluated

* Topical Organization

* Keyword Assignments

Pages Evaluated Tab

Columns in this tab include:

* Page Name

* URL

* Topical Focus

In this tab, I list all the pages I’ve evaluated – pages the client thinks are valuable. While my goal might be to make recommendations on only ten or twenty high level pages, if I think the pages these link to need to be reviewed, I’ll include those in this tab as well. That means for this tab, I might look at upwards of a couple hundred pages. Doing so sometimes shows me pages that are buried that need to be brought up to a higher level.

I then review the top pages in that list to examine their individual page optimization, as well as cross-page optimization. I do this to determine whether there’s topical dilution at the page and cross-page level, and whether they’re grouped together properly or need to be moved to a different location.

Topical Organization Tab

In this tab, columns include:

* Top Tier Content

* Second Tier Content

* Third Tier Content

* Existing URL

* Reorganization Notes

In this tab, I’ll typically include ten, twenty, or in rare situations, upwards of 100 or so pages. I reorganize and rearrange, if needed, the content from the first tab just carrying over the individual page names, or their re-assigned page names (from a topical focus perspective) – separating things out for better or more refined topical focus, and designate which of these is truly the most important content, which should be consolidated into other pages, and which can be relegated to lesser importance.

Keyword Assignments Tab

This tab typically includes the following columns:

* Page Name

* Topical Focus

* Existing URL

* Top Two Phrases

* Secondary Phrases

* New URL

* H1

Now depending on how big or complex the site is, or how much time has been allocated for this audit, I’ll usually only include information in this tab for the top ten, fifteen or twenty most important pages as revealed through processing the first two tabs.

Optional Columns

Optional columns I sometimes include in the keyword assignment tab include:

* Page Title

* Meta Description

* Paragraph Content

* Sectional Navigation Re-write

* Breadcrumb Navigation

I’ll include these optional columns if the client prefers I take it this far, as is often the case when they want to ensure the final optimization step is performed by me. Otherwise I leave it to their team or 3rd party vendor.

In these optional columns I do the actual work of re-writing the page Title, meta Description, actual on-page content, and if appropriate, I will also provide the new recommended section level navigation, and individual page level breadcrumb trail, though these elements typically only come along when I’m performing specific section level tactical audits.
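If you would rather build the workbook skeleton programmatically than by hand, the tabs and columns described above can be sketched as follows. I work in Excel; this sketch swaps in plain CSV text purely for illustration. The tab and column names come from this article, while the `tab_as_csv` helper and the sample row are made up.

```python
import csv
import io

# Tab and column names taken from the article; the optional
# columns are appended to the Keyword Assignments tab on request.
TABS = {
    "Pages Evaluated": ["Page Name", "URL", "Topical Focus"],
    "Topical Organization": [
        "Top Tier Content", "Second Tier Content", "Third Tier Content",
        "Existing URL", "Reorganization Notes",
    ],
    "Keyword Assignments": [
        "Page Name", "Topical Focus", "Existing URL", "Top Two Phrases",
        "Secondary Phrases", "New URL", "H1",
    ],
}
OPTIONAL_COLUMNS = [
    "Page Title", "Meta Description", "Paragraph Content",
    "Sectional Navigation Re-write", "Breadcrumb Navigation",
]


def tab_as_csv(tab_name, rows, include_optional=False):
    """Render one tab as CSV text; missing cells are left blank."""
    columns = list(TABS[tab_name])
    if include_optional and tab_name == "Keyword Assignments":
        columns += OPTIONAL_COLUMNS
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=columns, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Hypothetical row, for illustration only.
example_rows = [{
    "Page Name": "Running Shoes",
    "Topical Focus": "running shoes",
    "Existing URL": "/cat?id=17",
    "Top Two Phrases": "running shoes; trail running shoes",
    "New URL": "/running-shoes/",
    "H1": "Running Shoes",
}]
print(tab_as_csv("Keyword Assignments", example_rows, include_optional=True))
```

Each CSV can be opened as a separate sheet in Excel, with the blank cells left for the audit itself to fill in.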

How Far You Go is Up to You

By this point, I’ve expended anywhere from five to fifteen hours, the most I’ll put in for any single client tactical audit in any given month. This alone usually gives site owners, content managers and/or developers enough work to deal with in actually implementing and applying my recommendations in between all the other work they’ve got on their plates. If you want to do more at this point, feel free to have at it.

Subsequent Tactical Audits

Once that first round is completed and the work has been applied, I then go into sectional audits – taking on one section of a site at a time. If it’s a complex and deep section, I’ll break it down, starting with the most important content in that section (or the content perceived as most important) and go through the same tactical evaluation and refinement process I’ve described above.

The beauty of this multi-stage or multi-phase approach to tactical audits comes from the fact that we’re going to see improvements right away – and with each additional tactical audit being implemented, it’s a building-block process to success.

Searchers barely even glance at sponsored ads on Bing, Google

A new eye-tracking study by user experience firm User Centric shows that the majority of search engine users don't so much as glance at the sponsored ad listings on the right-hand side of the results page.

The study used search engines Bing and Google to see just how many search engine users paid attention to ads. While organic search results were viewed 100% of the time, only around 1 in 4 paid any attention to the sponsored ad listings.

Fewer Bing users (21%) than Google users (28%) looked at the ads, User Centric found. Even when they were looked at, ads were afforded but a quick glance, averaging around 1 second compared with the 14.7 seconds (Google) and 10.7 seconds (Bing) users spent resting their eyes on organic listings.

"The results of this study suggest that advertisers should place their ads above the organic search results, where the hit rate was more than three times higher and gaze time more than five times longer than on the sponsored links on the right," concludes User Centric.

"When deciding between Bing and Google, advertisers should keep in mind that, ad placement among the top sponsored results on Google attracted 22% more attention than an equivalent placement on Bing."

Ad blindness on the Internet isn't a new phenomenon. At the end of last year a study from Adweek Media and Harris Poll found that, despite an increase in innovation and creativity in online advertising, many consumers are blind to banner ads.

The results of their study of 2,098 U.S. adults also didn't bode well for search engine ads, which were cited as the second-most ignored ads of all channels, with 20% ignoring them.
